It’s been three or four years since I wrote it, and I have it on decent authority that the system is no longer in active use, so I think it’s safe to discuss the hub and spoke encryption system I built.

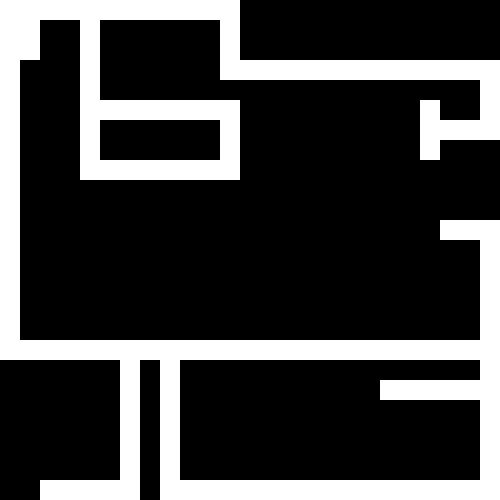

If you’re not familiar with the hub and spoke idea, my understanding is that when you create encrypted data stores, it’s best to ensure that no single target holds everything needed to decrypt sensitive data. In a traditional setup, the data lives in a central store, the hub. The spokes are the clients accessing the data. They sit closest to the outside of the network and are the easiest to compromise, so the only thing they store is the keys to decrypt the data.

Ideally the hub should not be connected to the internet at all, and should only be reachable via a direct connection. A well-configured database server probably already follows this architecture. The websites and apps connected to the database don’t hold the information themselves. Instead, they store access credentials and request information from the database as they need it. This ensures that the data doesn’t reside in the website in a way that is directly accessible. To get at the data, attackers would have to compromise both the website holding the credentials and the database itself. That said, accessing the database on most websites is pretty trivial. Database passwords are very frequently just stored unencrypted in the file system. And if you have access to the website, you have the direct connection needed to access the data.

The Problem

The company I was working for at the time repaired devices. To facilitate the repair process, we could ask customers for their user credentials. This way technicians could log in for virus scans, reconfigurations, or full reinstalls as the situation required. The issue with this was data storage. As more and more devices got connected to the internet, and as more and more services started requiring stronger and stronger passwords, the likelihood that the same username/password combo would get you into both a phone or laptop and someone’s banking information kept rising. Storing this information in plaintext in a database was getting more and more irresponsible, no matter how many times we recommended that customers change their passwords once they got their devices back.

So, we needed to protect their data while still allowing our technicians to store the credentials with the relevant tickets.

The Solution

An inverted hub and spoke.

Rather than storing the data in a central store, we would ask our central store to generate a single-use encryption key derived from the domain that requested the key, the time of the request, and a salt of random data generated by the central store. This key would be transferred to the requesting party over a double-encrypted connection.
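As a rough illustration of deriving a key from those three inputs, here is a minimal Python sketch. It is not the original implementation: the function name `derive_single_use_key`, the choice of HMAC-SHA256, and the example domain are all assumptions made for the example.

```python
import hashlib
import hmac
import secrets
import time


def derive_single_use_key(domain: str, requested_at: int, salt: bytes) -> bytes:
    """Derive a 32-byte key from the requesting domain, the request time, and a hub salt."""
    domain_bytes = domain.encode("utf-8")
    # Length-prefix the domain so two different (domain, time) pairs
    # can never serialize to the same message.
    message = (
        len(domain_bytes).to_bytes(2, "big")
        + domain_bytes
        + requested_at.to_bytes(8, "big")
    )
    # The hub's random salt is used as the HMAC key (an assumption of this sketch).
    return hmac.new(salt, message, hashlib.sha256).digest()


# Hypothetical request: the hub generates the salt and records the request time.
salt = secrets.token_bytes(32)
requested_at = int(time.time())
key = derive_single_use_key("repairs.example.com", requested_at, salt)
```

Because the salt is random per request, the same domain asking twice in the same second still receives two unrelated keys.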

Inside a traditional TLS connection, the client and server would negotiate a second layer of encryption using the same principles as TLS, just higher in the network stack. Inside that double-encrypted connection, the central store would send over the encryption key, which had itself been encrypted with a key provided by the client at the time of the request.

The client would then store the encrypted data and the key that unlocks the encrypted key. The central store would store the encrypted keys. In theory this meant that neither client nor central store could do anything useful with the encrypted information they held. Instead, they would need to coordinate to turn the encrypted information into something useful.
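The split in who holds what can be sketched in a few lines of Python. This is an illustration under loud assumptions, not the original code: `keystream_xor` is a toy stand-in cipher built from HMAC-SHA256 so the example runs on the standard library alone, and the record layouts and sample credential are hypothetical. Real code should use a vetted authenticated cipher such as AES-GCM instead.

```python
import hashlib
import hmac
import secrets


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy cipher for illustration only: XOR data with an HMAC-SHA256 counter keystream.

    Applying it twice with the same key and nonce returns the original data.
    It offers no integrity protection.
    """
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        block = hmac.new(key, nonce + counter, hashlib.sha256).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


# Spoke: creates a wrapping key and sends it along with the key request.
wrapping_key = secrets.token_bytes(32)

# Hub: generates the data key, wraps it with the spoke's key,
# and keeps only the wrapped form.
data_key = secrets.token_bytes(32)
wrap_nonce = secrets.token_bytes(16)
hub_record = {
    "wrap_nonce": wrap_nonce,
    "wrapped_key": keystream_xor(wrapping_key, wrap_nonce, data_key),
}

# Spoke: unwraps the key, encrypts the credential, then keeps only
# the ciphertext and the wrapping key (not the data key itself).
unwrapped = keystream_xor(wrapping_key, hub_record["wrap_nonce"], hub_record["wrapped_key"])
data_nonce = secrets.token_bytes(16)
spoke_record = {
    "wrapping_key": wrapping_key,
    "data_nonce": data_nonce,
    "ciphertext": keystream_xor(unwrapped, data_nonce, b"laptop password: hunter2"),
}

# Decryption needs both records: the hub's wrapped key and the spoke's wrapping key.
recovered_key = keystream_xor(
    spoke_record["wrapping_key"], hub_record["wrap_nonce"], hub_record["wrapped_key"]
)
plaintext = keystream_xor(recovered_key, spoke_record["data_nonce"], spoke_record["ciphertext"])
```

The hub record alone is a wrapped key with nothing to unwrap it, and the spoke record alone is ciphertext plus a key that only opens something stored elsewhere.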

On top of that, whenever data was decrypted, it would be re-encrypted with another key, so that if a key was scraped from a log or sniffed from a packet, it would most likely be invalid by the time it could be used.

The Issues

If you looked at the last section and said “that’s a lot of encryption”, you have already seen the major pitfall of the scheme.

It was incredibly fragile. This was by design. My belief was that by requiring this much authentication, you would deter anyone who wasn’t prepared to put significant effort into harvesting data. You had to have a valid API key, which could be revoked at any time. You had to be advertising the same domain as you were when the data was encrypted. You had to be advertising the same IP address. You had to know the time the request was made. You had to know the key associated with the key you were requesting. You had to know how to negotiate the encrypted connection to actually get the key in a usable form. You had to know how to read the resulting data to pull out a variably positioned random salt, embedded in every encrypted key and designed to make the key useless if you didn’t know it was there.

If you missed any of these steps you would have garbage. Useless garbage.

Which is great. That’s exactly what should happen with encrypted data if you don’t have the keys to open it. But I was naive about the bane of every developer on earth: timekeeping differences. All of the encryption/decryption keys for the encrypted connection between servers were based on the current server time. If the connection took too long, or if the hub and spoke didn’t agree on the time, they would be sending information back and forth with no way of knowing that each side was working from a different key.

The downfall of the scheme was that I rolled my own double-encrypted connection. It worked pretty reliably, but all it took was a brief moment when the server was under load and a request took a little too long to process, and suddenly the data would be encrypted with an unintended key that rendered it inert and unrecoverable.

It didn’t help that our network admin had a tendency to change static IPs and advertised domains without telling anyone, but the worst issue was the reliance on time.
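To make the time-based failure concrete, here is a minimal Python sketch of a key derived from the current time. The 30-second window, the HMAC-SHA256 construction, and every name in it are assumptions for illustration; how the original keys were actually computed isn’t recorded here.

```python
import hashlib
import hmac

WINDOW_SECONDS = 30  # hypothetical window size, chosen only for this example


def session_key(shared_secret: bytes, now: float) -> bytes:
    """Derive a key from a shared secret and the time window that `now` falls in."""
    window = int(now // WINDOW_SECONDS)
    return hmac.new(shared_secret, window.to_bytes(8, "big"), hashlib.sha256).digest()


secret = b"hypothetical shared secret"

# The spoke derives its key 29 seconds into a 30-second window.
spoke_time = 1_700_000_009.0
spoke_key = session_key(secret, spoke_time)

# A quick hub handles the request half a second later, still in the same window.
fast_match = session_key(secret, spoke_time + 0.5) == spoke_key

# A loaded hub handles it two seconds later, after the window has rolled over.
slow_match = session_key(secret, spoke_time + 2.0) == spoke_key
```

With these values, `fast_match` is true and `slow_match` is false. Nothing raises an error in the slow case: the hub simply derives a different key, which is why the mismatch went unnoticed until the data was unreadable.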

Lessons

I think a lot of the principles and choices were sound. I play around with revisiting this pretty regularly. To this day vogon uses a rolling salt for user passwords. Every time you log in, the salt and hash are regenerated, which makes it harder to reverse engineer your password if the hash and salt are grabbed at different times.
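For readers who haven’t seen a rolling salt, the idea can be sketched in Python as follows. This is not vogon’s code: the record layout, the function names, PBKDF2 as the hash, and the iteration count are all assumptions made for the example.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # hypothetical work factor for this sketch


def hash_password(password: str, salt: bytes) -> bytes:
    """Hash a password with PBKDF2-HMAC-SHA256 and the given salt."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def new_record(password: str) -> dict:
    """Create a stored record holding a fresh salt and the matching hash."""
    salt = secrets.token_bytes(16)
    return {"salt": salt, "hash": hash_password(password, salt)}


def login(record: dict, password: str) -> bool:
    """Check the password; on success, replace the stored salt and hash."""
    candidate = hash_password(password, record["salt"])
    if not hmac.compare_digest(candidate, record["hash"]):
        return False  # failed logins leave the record untouched
    # The plaintext password is only available right now, so this is the
    # one moment the record can be re-salted and re-hashed.
    new_salt = secrets.token_bytes(16)
    record["salt"] = new_salt
    record["hash"] = hash_password(password, new_salt)
    return True


record = new_record("correct horse battery staple")
before = (record["salt"], record["hash"])
logged_in = login(record, "correct horse battery staple")
rolled = (record["salt"], record["hash"]) != before
```

A salt copied before a login and a hash copied after it no longer belong together, so an attacker needs both from the same moment.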

Honestly, I’m still pretty proud of the scheme, even if it was made useless by something fairly predictable. I think rather than relying on the client and store to agree on a time, I’d have the client timestamp the request. While this does mean that there’s more key information in transit should you successfully decrypt both levels of encryption, there’s enough redundancy that I don’t know that anything practical is lost by doing that.

Additionally, I’d want to revisit the way I was communicating the salt position. The salt was randomly generated and of a random length. But at the time, the best way I could find to tell the decryption process where the salt was and how long it was, was to append coordinates. If you knew what those numbers were and where to find them, it was trivial to remove the salt.
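A minimal Python sketch shows why appended coordinates are a weak point. The three-byte trailer (two bytes of position, one byte of length) and the salt length range are hypothetical; the original layout isn’t described beyond “append coordinates”.

```python
import secrets


def embed_salt(key: bytes) -> bytes:
    """Insert a random-length salt at a random position and append its coordinates."""
    salt_len = 4 + secrets.randbelow(13)        # 4 to 16 bytes of salt
    position = secrets.randbelow(len(key) + 1)  # anywhere from the start to the end
    salt = secrets.token_bytes(salt_len)
    salted = key[:position] + salt + key[position:]
    # Hypothetical trailer: 2-byte position followed by 1-byte salt length.
    return salted + position.to_bytes(2, "big") + salt_len.to_bytes(1, "big")


def strip_salt(blob: bytes) -> bytes:
    """Read the trailer and cut the salt back out."""
    position = int.from_bytes(blob[-3:-1], "big")
    salt_len = blob[-1]
    salted = blob[:-3]
    return salted[:position] + salted[position + salt_len:]


key = secrets.token_bytes(32)
blob = embed_salt(key)
recovered = strip_salt(blob)  # equals `key`
```

`strip_salt` needs no secret at all, only knowledge of the trailer format, which is exactly the weakness: the salt’s protection rests on the attacker not knowing the layout.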

In my experience, despite all the math, engineering, and cleverness that goes into security, most of it boils down to making a system so frustrating to break that an attacker moves on to an easier target. Most of the things that get exploited are fairly common flaws. The exploits that get really interesting are the ones found for the really big targets: those with huge attack surfaces, or those with the biggest, most valuable data stores.

I doubt my scheme would be good enough to protect the information banks need to store, but I know it was good enough to keep someone’s cellphone pin out of the hands of someone attempting to prey on a single repair shop.